1. Abstract

Research and development (R&D) of learning-based recognition and robotic manipulation systems is attracting considerable attention. Studies using deep neural networks (DNNs) in particular have demonstrated outstanding performance in several research areas, and R&D combining robotics and DNNs is being actively pursued.

We developed a basic education kit that consists of a robotic system and a manual explaining how to use it. The kit allows beginners to experience system development by operating a physical robot.

The kit can be implemented with whatever hardware is available, and extensions can easily be added to the system.

To encourage wider use of the proposed system, we plan to have users customize the environment to match the object they use and share that information.

We developed a robotic grasping system that uses a DNN. Its goal is to grasp an object with the gripper of a robotic arm, wherever the object is placed.

2. Requirements

- Ubuntu 16.04

- OpenRTM-aist-1.1.2-RELEASE

- CMake 3.5.1

- TensorFlow 1.8.0 / TensorFlow-gpu 1.3.0 or later

- Keras

3. Installation

3.1 Software

RT-Middleware

Download OpenRTM-aist for C++ and Java.

Source and binary packages for Linux and Windows are available from the OpenRTM-aist website.

TensorFlow

Follow the installation instructions on the official website.

Keras

Follow the installation instructions on the official website.

RT components (RTCs)

We used four RTCs to test our system. For details, see Section 4 (System Design).

ArmImageGenerator: https://github.com/ogata-lab/ArmImageGenerator.git

MikataArmRTC: https://github.com/ogata-lab/MikataArmRTC.git

WebCameraRTC: https://github.com/sugarsweetrobotics/WebCamera.git

KerasArmImageMotionGenerator: https://github.com/ogata-lab/KerasArmImageMotionGenerator.git

3.2 Hardware

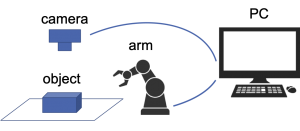

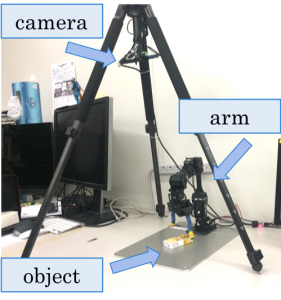

Experimental Environment

The hardware required to operate the system consists of a robot arm (the manipulator), a camera (the sensor), and an object to be grasped.

For example, our implementation used the following:

・Robot Arm with six degrees of freedom and a two-finger gripper (Mikata Arm, ROBOTIS-Japan)

・RGB Camera (HD Webcam C615n, Logicool)

・Tripod (ELCarmagne538, Velbon)

・Box of sweets (size: 95 mm × 45 mm × 18 mm)

The RGB web camera was attached to the tripod above the arm.

The hardware was kept in the same position during the data collection (DC) and task execution (TE) steps. We recommend keeping the camera in the same installation position throughout all steps.

4. System Design

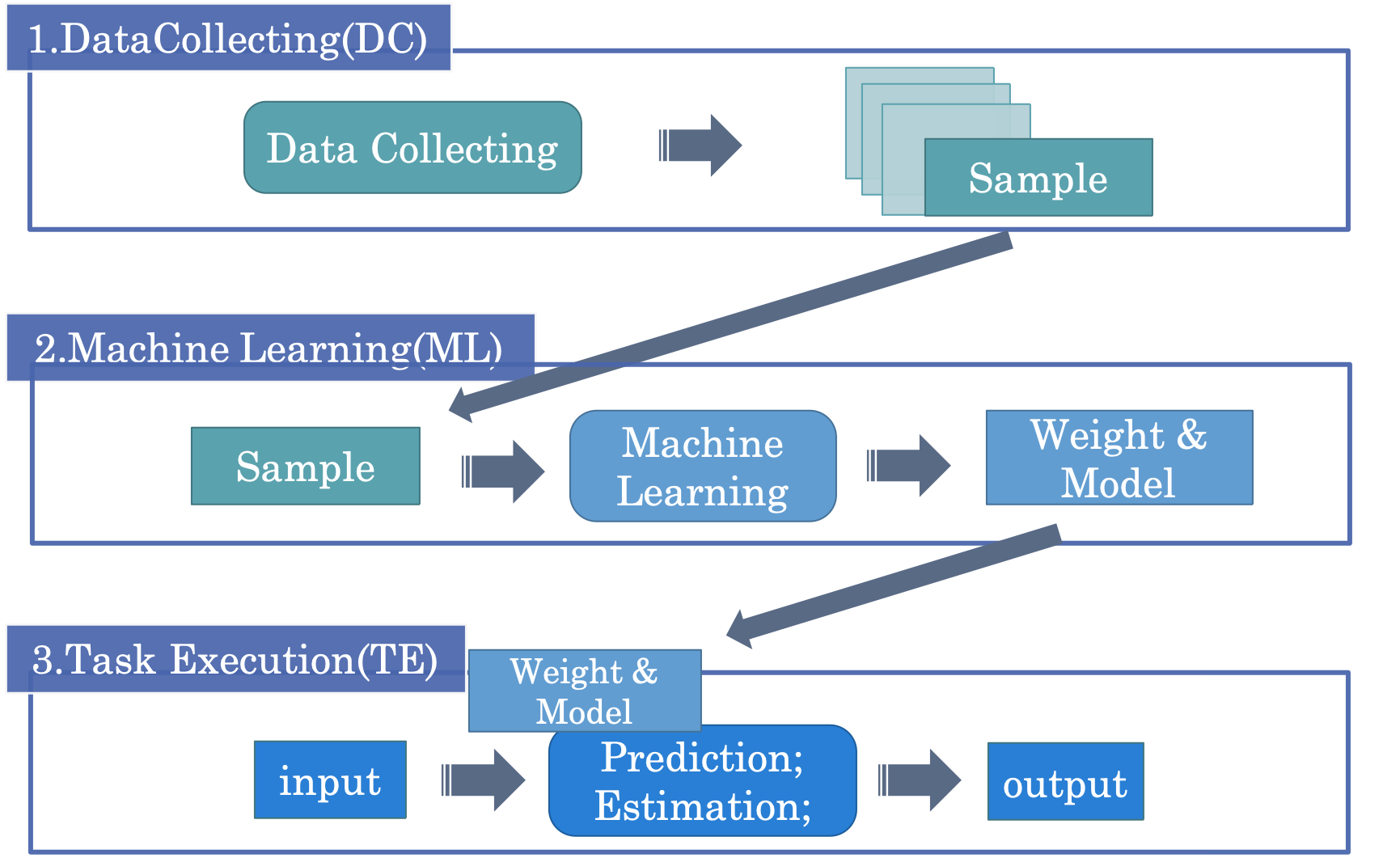

The process of this system can be divided into three steps:

1) Collecting and preprocessing data (Data Collecting, DC)

2) Training the model (Machine Learning, ML)

3) Executing tasks with the trained model (Task Execution, TE)

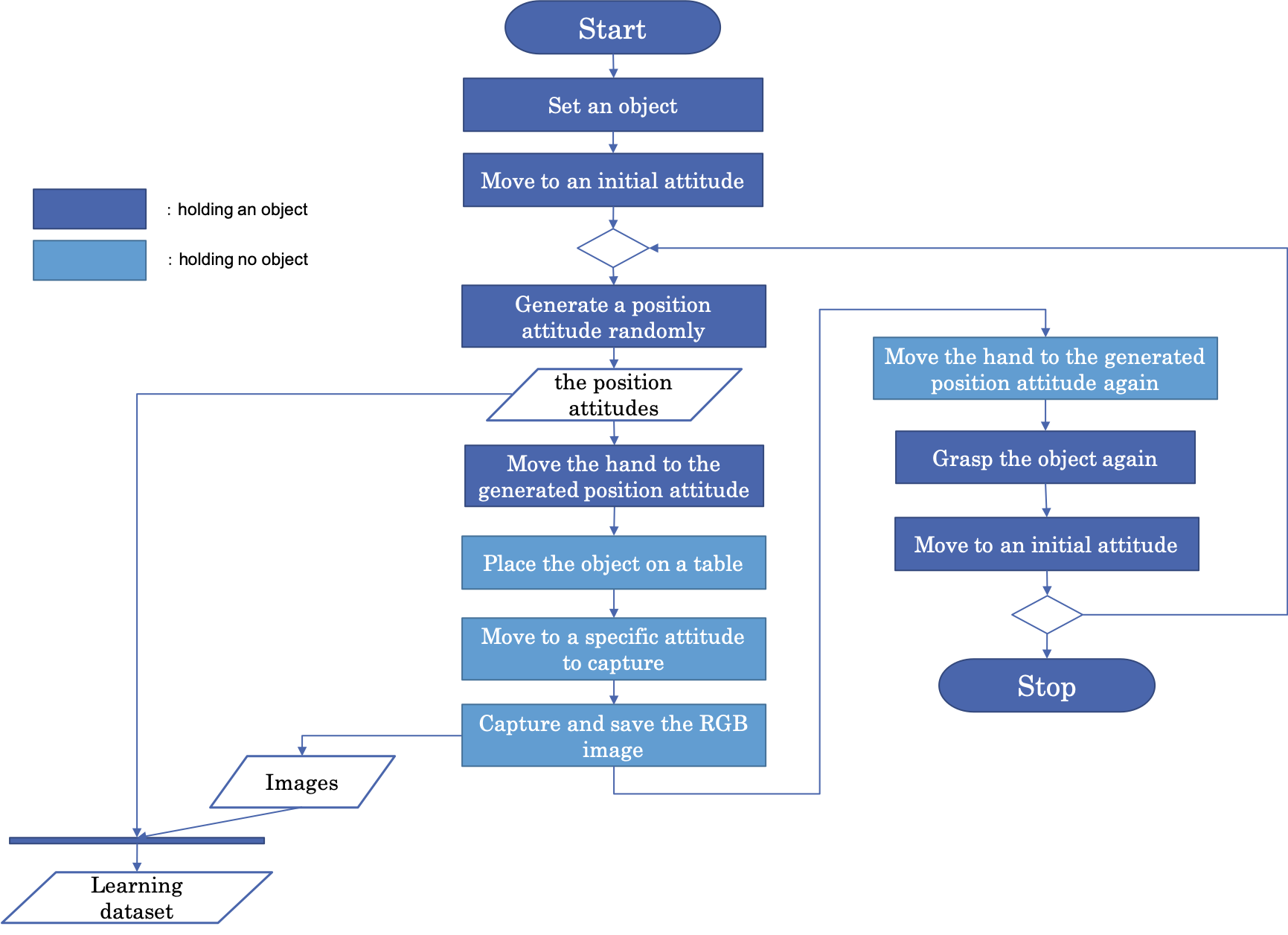

4.1 Data Collecting (DC)

In this step, the system collects paired data, each pair consisting of an RGB image from the camera and the position of the object.

Deep learning requires large amounts of data to train a model, so we developed a system that collects the dataset automatically. The data collection procedure is as follows:

1. Place an object within the workspace of the robot arm.

2. Repeat the following steps.

(1) Move the gripper to a randomly generated position attitude (xy coordinates and rotation angle) on the table.

(2) Place the object at that position attitude on the table.

(3) Move the arm to a predefined attitude and capture the RGB image.

(4) Save the RGB image and the position attitude together as a set in a file.

(5) Grasp the object.
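The loop above can be sketched in Python as follows. This is not the ArmImageGenerator source. It is a minimal illustration that assumes hypothetical arm and camera objects with made-up methods (move_to, release, move_to_capture_pose, grasp, capture), assumed units (metres and radians), and assumed workspace bounds. The FakeArm and FakeCamera stand-ins let the loop run without any hardware.

```python
import csv
import io
import math
import random
from dataclasses import dataclass


@dataclass
class Pose:
    """Position attitude on the table: x, y in metres, theta in radians (assumed units)."""
    x: float
    y: float
    theta: float


def random_pose(rng, x_range, y_range, theta_range):
    """Sample a position attitude uniformly inside the assumed workspace bounds."""
    return Pose(rng.uniform(*x_range), rng.uniform(*y_range), rng.uniform(*theta_range))


def collect(n_samples, arm, camera, writer, rng,
            x_range=(0.15, 0.30), y_range=(-0.10, 0.10),
            theta_range=(-math.pi / 2, math.pi / 2)):
    """Repeat steps (1)-(5) n_samples times; the default bounds are hypothetical."""
    for i in range(n_samples):
        pose = random_pose(rng, x_range, y_range, theta_range)
        arm.move_to(pose)                              # (1) carry the object to a random pose
        arm.release()                                  # (2) place it on the table
        arm.move_to_capture_pose()                     # (3) fixed attitude for the camera shot
        image_name = camera.capture(f"{i:05d}.png")    # (4) save the image ...
        writer.writerow([image_name, pose.x, pose.y, pose.theta])  # ... paired with its pose
        arm.move_to(pose)                              # (5) return to the object
        arm.grasp()                                    #     and grasp it again


class FakeArm:
    """Hypothetical stand-in that only records the commands it receives."""

    def __init__(self):
        self.log = []

    def move_to(self, pose):
        self.log.append(("move_to", pose))

    def release(self):
        self.log.append(("release",))

    def move_to_capture_pose(self):
        self.log.append(("capture_pose",))

    def grasp(self):
        self.log.append(("grasp",))


class FakeCamera:
    """Hypothetical stand-in that pretends to save an image and returns its file name."""

    def capture(self, file_name):
        return file_name


if __name__ == "__main__":
    buffer = io.StringIO()
    collect(3, FakeArm(), FakeCamera(), csv.writer(buffer), random.Random(0))
    print(buffer.getvalue())
```

Separating the pose sampler from the loop makes it easy to tighten the bounds so that every sampled pose is reachable and visible to the camera.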

The DC system consists of three RTCs: ArmImageGenerator, MikataArmRTC, and WebCameraRTC.

ArmImageGenerator

This RTC performs automatic data collection by operating the robot and saving RGB camera images together with the position attitudes (xy coordinates and rotation angle). This data is written to a CSV file saved in a folder (ArmImageGenerator/build/src/(date)/joint.csv).

ArmImageGenerator: https://github.com/ogata-lab/ArmImageGenerator.git
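The collected pairs must be read back before training. The following is a minimal sketch of a loader. The column order (image file, x, y, theta) is an assumption, so check the joint.csv that ArmImageGenerator actually writes and adjust the indices if it differs. The name load_pairs is hypothetical.

```python
import csv


def load_pairs(lines, skip_header=False):
    """Parse joint.csv-style rows into (image_file, (x, y, theta)) pairs.

    The column order image_file, x, y, theta is an assumption; adapt it to
    the file actually produced by ArmImageGenerator.
    """
    rows = csv.reader(lines)
    if skip_header:
        next(rows, None)  # drop a header line if the file has one
    pairs = []
    for row in rows:
        if not row:
            continue  # ignore blank lines
        image_file = row[0].strip()
        x, y, theta = (float(value) for value in row[1:4])
        pairs.append((image_file, (x, y, theta)))
    return pairs
```

Because csv.reader accepts any iterable of strings, the function can be fed an open file, for example with open("ArmImageGenerator/build/src/(date)/joint.csv", newline="") as f: pairs = load_pairs(f), with (date) replaced by the actual folder name.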

MikataArmRTC

This RTC controls a robot arm with six degrees of freedom and a two-finger gripper, the Mikata Arm (ROBOTIS-Japan).

MikataArmRTC: https://github.com/ogata-lab/MikataArmRTC.git

After cloning the repository, fetch its Git submodules:

$ git submodule update --init --recursive

WebCameraRTC

This RTC acquires images from the camera.

WebCameraRTC: https://github.com/sugarsweetrobotics/WebCamera.git

4.2 Machine Learning (ML)

We used the dataset collected in the DC step to train the closed-loop, vision-based grasping system. The resulting model estimates a graspable hand position attitude of the object from the RGB camera image. We prepared a sample learning model based on a convolutional neural network (CNN), built with TensorFlow and Keras.

A sample training script (sample.py, used in Section 5.2) is provided.

We recommend creating a new folder and placing this sample file in it.

If you use this script, edit "log_dir = '/~/'" on line 39 of the script to specify that folder.
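When a CNN regresses a position attitude, you need to decide how to encode the target. The sketch below shows one common choice, which may differ from what sample.py does. It normalises x and y by assumed workspace bounds and represents the rotation angle as (sin theta, cos theta), so that angles just either side of ±pi map to nearby targets instead of opposite ends of the range. decode_target inverts the mapping, for example when reading the network output in the TE step. Both function names are hypothetical.

```python
import math


def encode_target(x, y, theta, x_range, y_range):
    """Map a position attitude to a 4-value regression target.

    x and y are scaled to [0, 1] using the (assumed) workspace bounds; theta is
    replaced by its sine and cosine so the target is continuous across +/-pi.
    """
    u = (x - x_range[0]) / (x_range[1] - x_range[0])
    v = (y - y_range[0]) / (y_range[1] - y_range[0])
    return [u, v, math.sin(theta), math.cos(theta)]


def decode_target(target, x_range, y_range):
    """Invert encode_target; atan2 recovers theta in (-pi, pi]."""
    u, v, s, c = target
    x = x_range[0] + u * (x_range[1] - x_range[0])
    y = y_range[0] + v * (y_range[1] - y_range[0])
    return x, y, math.atan2(s, c)
```

With this encoding, the final layer of the network would output four values. Because atan2 uses only the ratio of sine to cosine, the decoded angle stays meaningful even if the predicted pair is not exactly unit length.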

4.3 Task Execution (TE)

We constructed an image recognizer from the weights and model obtained in the ML step.

In this step, the system estimates a graspable hand position attitude of the object (xy coordinates and rotation angle) from the RGB camera image and grasps the target object.

The TE system consists of three RTCs: KerasArmImageMotionGenerator, MikataArmRTC, and WebCameraRTC.

KerasArmImageMotionGenerator

This RTC estimates a graspable hand position attitude of the object (xy coordinates and rotation angle) from the RGB camera image and commands the robot arm to move to that position.

First, compile the IDL files by running Idlcompile.sh or Idlcompile.bat.

Before running either file, edit its line 2, -I"/Users/~/idl/", so that the path points to your folder.

KerasArmImageMotionGenerator: https://github.com/ogata-lab/KerasArmImageMotionGenerator.git
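Before an estimated position attitude is sent to the arm, some post-processing is usually prudent. The following minimal sketch illustrates the idea and is not taken from KerasArmImageMotionGenerator. It uses hypothetical workspace and wrist limits, which you would replace with values measured for your own setup. It clamps x and y to the reachable area. It also relies on the fact that a two-finger parallel gripper looks the same after a 180-degree turn to fold the angle into [-pi/2, pi/2).

```python
import math

# Hypothetical limits (metres / radians); measure and replace them for your setup.
X_RANGE = (0.15, 0.30)
Y_RANGE = (-0.10, 0.10)
THETA_RANGE = (-math.pi / 2, math.pi / 2)


def clamp(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def to_grasp_command(x, y, theta):
    """Turn an estimated position attitude into a reachable grasp target.

    theta and theta + pi describe the same grasp for a two-finger gripper,
    so the angle is first folded into [-pi/2, pi/2); the final clamp only
    matters if THETA_RANGE is narrower than that interval.
    """
    theta = (theta + math.pi / 2) % math.pi - math.pi / 2
    return (clamp(x, *X_RANGE), clamp(y, *Y_RANGE), clamp(theta, *THETA_RANGE))
```

Folding the angle this way avoids commanding a wrist rotation of nearly 180 degrees when a much smaller one reaches an equivalent grasp.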

5. Usage

5.1 Data Collecting (DC)

Here is an example of the OpenRTM-aist RT System Editor (RTSystemEditor) screen during DC.

To run the DC system, launch the three RTCs (ArmImageGenerator, MikataArmRTC, and WebCameraRTC) and connect their ports. The RTC configuration view, displayed at the lower center of the screen, is used to edit the parameters of each RTC.

To collect the data, activate all RTCs.

After starting the system, have the robot arm grasp the object.

When data collection is finished, deactivate or exit ArmImageGenerator.

Check the collected RGB camera images and the file joint.csv.

5.2 Machine Learning (ML)

Execute the following command to train a model with the collected dataset:

$ python sample.py

After training, copy the two files model_log.json and param.hdf5 into the KerasArmImageMotionGenerator folder.
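If you prefer to script this copy, the small helper below does it and fails loudly when training did not produce both files. It is a sketch: install_model is a hypothetical name, and the two folder arguments are whatever paths you chose for the training output and the RTC.

```python
import shutil
from pathlib import Path

# Files written by sample.py that KerasArmImageMotionGenerator needs.
MODEL_FILES = ("model_log.json", "param.hdf5")


def install_model(train_dir, rtc_dir):
    """Copy the trained model files into the RTC folder and return the new paths."""
    train_dir, rtc_dir = Path(train_dir), Path(rtc_dir)
    missing = [name for name in MODEL_FILES if not (train_dir / name).is_file()]
    if missing:
        raise FileNotFoundError(f"training output not found: {', '.join(missing)}")
    copied = []
    for name in MODEL_FILES:
        # copy2 also preserves timestamps, which helps tell model versions apart
        copied.append(Path(shutil.copy2(train_dir / name, rtc_dir / name)))
    return copied
```

A call would look like install_model("path/to/training_folder", "path/to/KerasArmImageMotionGenerator"), with both paths adjusted to your environment.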

5.3 Task Execution (TE)

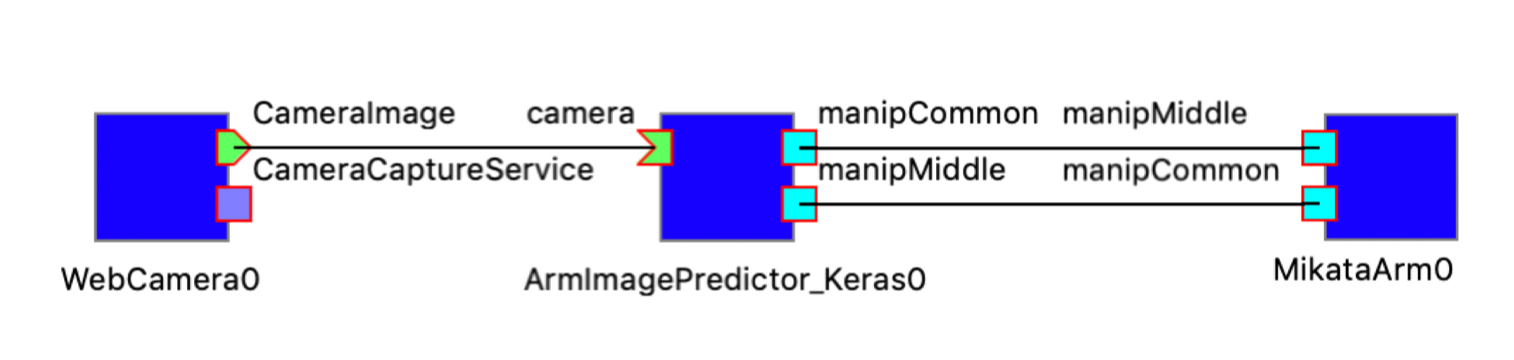

The figure above shows an example screen of the OpenRTM-aist RT System Editor (RTSystemEditor) during TE.

To run the TE system, launch the three RTCs (KerasArmImageMotionGenerator, MikataArmRTC, and WebCameraRTC), connect their ports, and activate them.

The terminal running KerasArmImageMotionGenerator then displays the prompt "Hello?:".

To execute the task, place the object on the table and press the Enter key in that terminal.

When the process completes, the task has been executed successfully.
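The interaction described above can be pictured with the following Python sketch. It is a hypothetical illustration, not the code of KerasArmImageMotionGenerator. estimate_pose and command_arm stand in for the CNN inference and the command sent to MikataArmRTC, and typing "q" to quit is our own addition. The read_line parameter lets the loop be driven without a real terminal.

```python
def run_prompt_loop(estimate_pose, command_arm, read_line=input):
    """Wait for Enter, estimate a grasp from the current image, and command the arm.

    estimate_pose and command_arm are hypothetical callables; typing 'q' quits
    (an assumption, not a documented feature of the RTC).
    """
    executed = 0
    while True:
        try:
            answer = read_line("Hello?: ")
        except EOFError:
            break  # input stream closed
        if answer.strip().lower() == "q":
            break
        command_arm(estimate_pose())  # one grasp attempt per Enter key press
        executed += 1
    return executed
```

Keeping the prompt separate from inference means each grasp starts only after the operator has placed the object, which matches the step-by-step procedure above.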